Translated from avs-device-sdk by PattenKuo (习立风), 2018/11/12

What is the Alexa Voice Service (AVS)?

The Alexa Voice Service (AVS) enables developers to integrate Alexa directly into their products, bringing the convenience of voice control to any connected device. AVS provides developers with access to a suite of resources to quickly and easily build Alexa-enabled products, including APIs, hardware development kits, software development kits, and documentation.

Overview of the AVS Device SDK

The AVS Device SDK provides C++-based (C++11 or later) libraries that leverage the AVS API to create device software for Alexa-enabled products. It is modular and abstracted, providing components for handling discrete functions such as speech capture, audio processing, and communications, with each component exposing APIs that you can use and customize for your integration. It also includes a sample app that demonstrates the interactions with AVS.

Get Started

You can set up the SDK on the following platforms:

- Ubuntu Linux

- Raspberry Pi (Raspbian Stretch)

- macOS

- Windows 64-bit

- Generic Linux

- Android

You can also prototype with a third-party development kit:

- XMOS VocalFusion 4-Mic Kit

- Synaptics AudioSmart 2-Mic Dev Kit for Amazon AVS with NXP SoC

- Intel Speech Enabling Developer Kit

- Amlogic A113X1 Far-Field Dev Kit for Amazon AVS

- Allwinner SoC-Only 3-Mic Far-Field Dev Kit for Amazon AVS

- DSPG HDClear 3-Mic Development Kit for Amazon AVS

Or if you prefer, you can start with our SDK API Documentation.

Learn More About The AVS Device SDK

Watch this tutorial to learn how this SDK works and how to set it up.

SDK Architecture

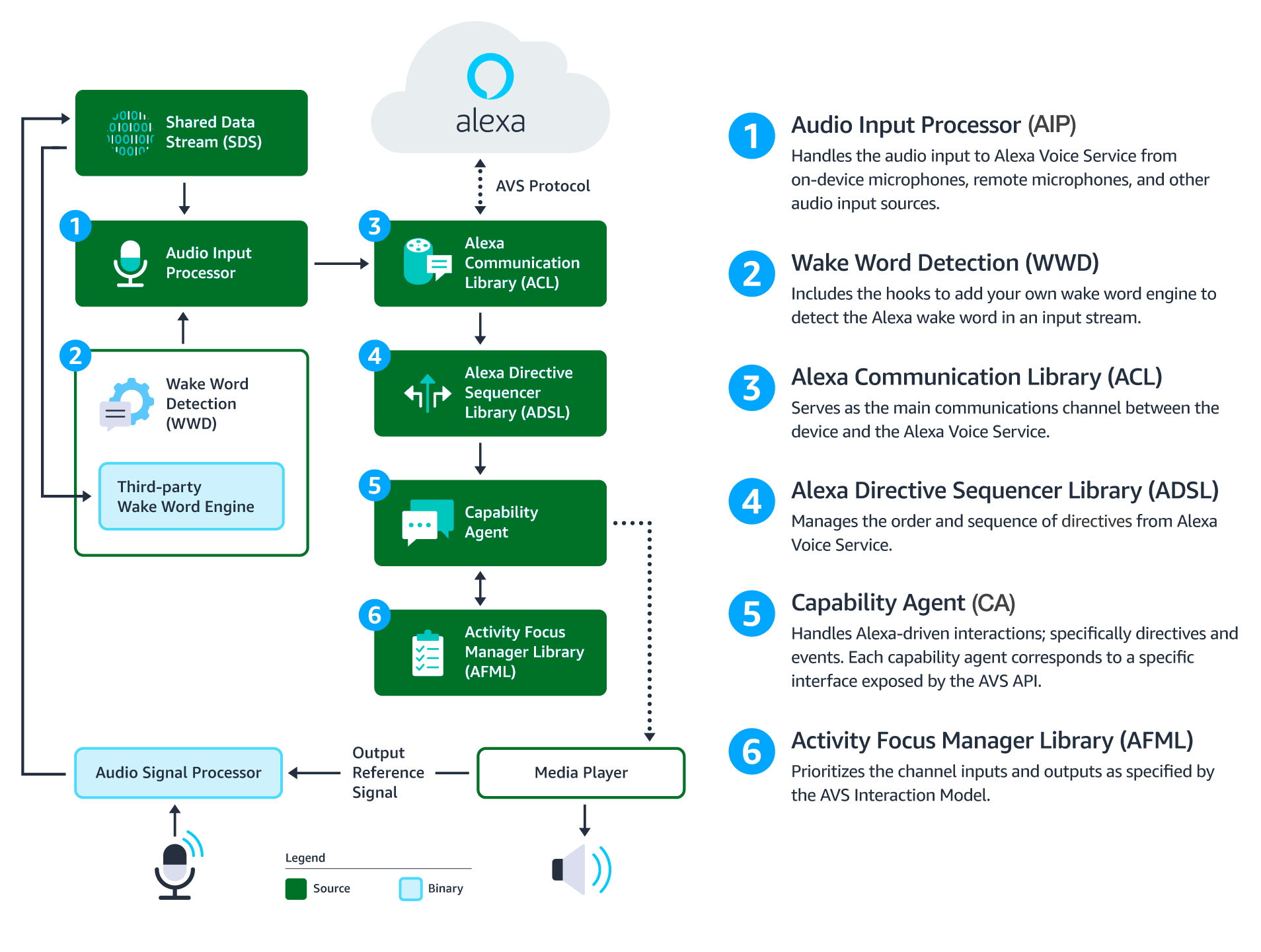

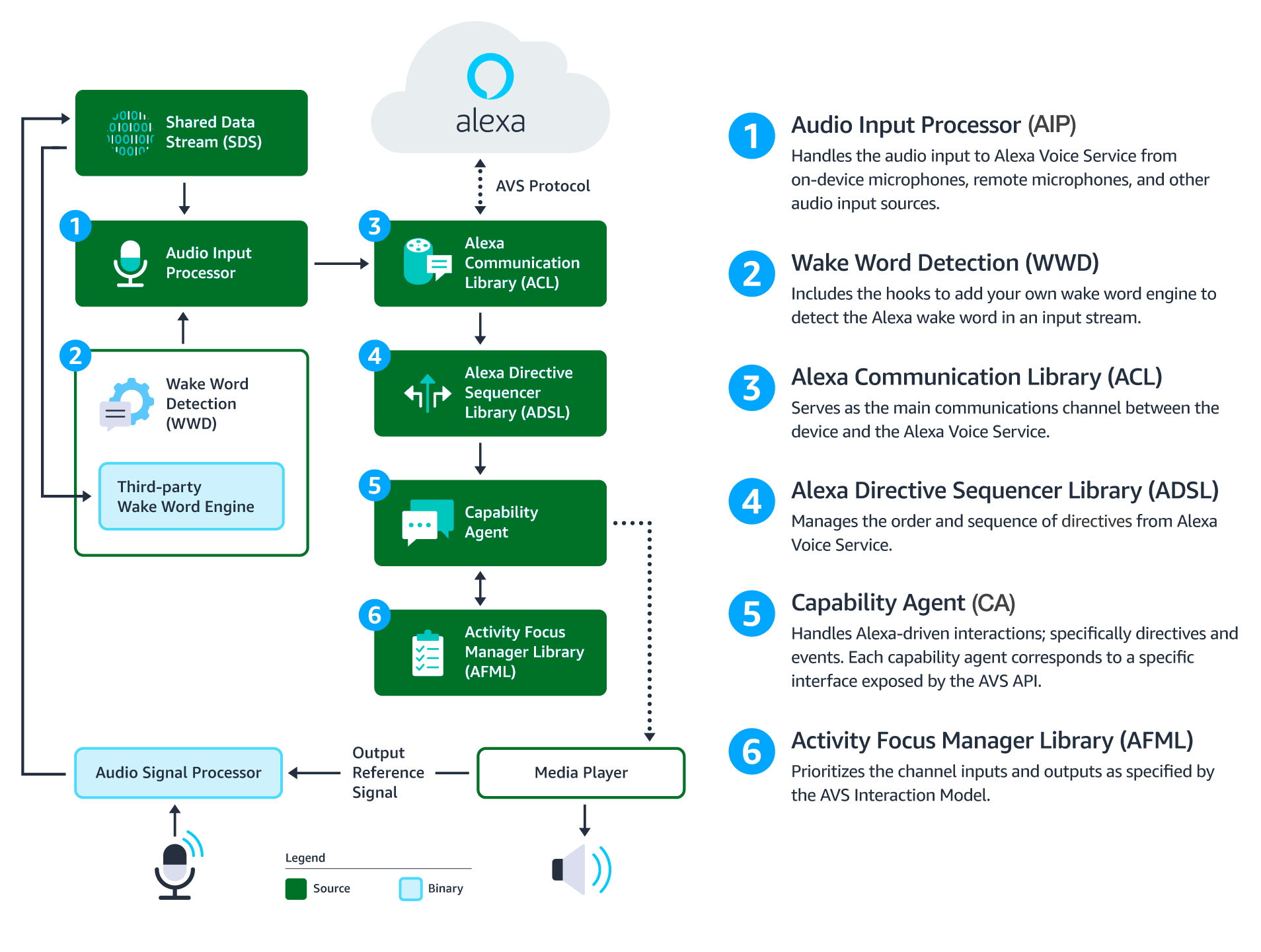

This diagram illustrates the data flows between the components that make up the AVS Device SDK for C++.

Audio Signal Processor (ASP) - Third-party software that applies signal processing algorithms to both input and output audio channels. The applied algorithms are designed to produce clean audio data and include, but are not limited to, acoustic echo cancellation (AEC), beamforming (fixed or adaptive), voice activity detection (VAD), and dynamic range compression (DRC). If a multi-microphone array is present, the ASP constructs and outputs a single audio stream for the array.

Shared Data Stream (SDS) - A single-producer, multi-consumer buffer that allows for the transport of any type of data between a single writer and one or more readers. SDS performs two key tasks:

- It passes audio data between the audio front end (or Audio Signal Processor), the wake word engine, and the Alexa Communications Library (ACL) before the audio is sent to AVS

- It passes data attachments sent by AVS to specific capability agents via the ACL

SDS is implemented atop a ring buffer on a product-specific (or user-specified) memory segment, which allows it to be used for in-process or interprocess communication. Keep in mind that the writer and reader(s) may be in different threads or processes.
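To make the single-producer, multi-consumer idea concrete, here is a minimal in-process sketch. It assumes a fixed-capacity ring buffer of 16-bit audio samples, one writer, and an independent read cursor per reader. The names `SpmcRingBuffer`, `createReader`, and `read` are hypothetical and are not the SDK's SharedDataStream API. The real SDS also synchronizes threads and can live in an interprocess memory segment, which this sketch omits.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical single-producer, multi-consumer ring buffer (not the SDK's
// SharedDataStream API). Single-threaded and in-process only: the real SDS
// also synchronizes readers and writers and can live in shared memory.
class SpmcRingBuffer {
public:
    explicit SpmcRingBuffer(std::size_t capacity) : m_buffer(capacity), m_writeIndex(0) {
        assert(capacity > 0);
    }

    // The single writer appends samples; once the buffer wraps, the oldest
    // samples are overwritten.
    void write(const std::vector<std::int16_t>& samples) {
        for (std::int16_t sample : samples) {
            m_buffer[m_writeIndex % m_buffer.size()] = sample;
            ++m_writeIndex;
        }
    }

    // Each consumer keeps its own read cursor, so readers never affect one another.
    class Reader {
    public:
        Reader(const SpmcRingBuffer& stream, std::uint64_t start) : m_stream(stream), m_readIndex(start) {}

        // Copies up to maxSamples unread samples into out and returns how many were
        // copied, or -1 if the writer already overwrote unread data (overrun).
        long read(std::vector<std::int16_t>& out, std::size_t maxSamples) {
            const std::uint64_t written = m_stream.m_writeIndex;
            const std::size_t capacity = m_stream.m_buffer.size();
            out.clear();
            if (written - m_readIndex > capacity) {
                return -1;
            }
            while (m_readIndex < written && out.size() < maxSamples) {
                out.push_back(m_stream.m_buffer[m_readIndex % capacity]);
                ++m_readIndex;
            }
            return static_cast<long>(out.size());
        }

    private:
        const SpmcRingBuffer& m_stream;
        std::uint64_t m_readIndex;
    };

    // New readers start at the current write position and only see future data.
    Reader createReader() const { return Reader(*this, m_writeIndex); }

private:
    std::vector<std::int16_t> m_buffer;
    std::uint64_t m_writeIndex;
};
```

In this model, the wake word engine and the ACL would each hold their own `Reader` on the same stream. A reader that falls more than one buffer's worth behind the writer gets an overrun result instead of silently reading corrupted data.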

Wake Word Engine (WWE) - Software that spots wake words in an input stream. It consists of two binary interfaces: the first handles wake word spotting (or detection), and the second handles specific wake word models (in this case “Alexa”). Depending on your implementation, the WWE may run on the system on a chip (SoC) or on a dedicated chip, such as a digital signal processor (DSP).

Audio Input Processor (AIP) - Handles audio input that is sent to AVS via the ACL. Supported inputs include on-device microphones, remote microphones, and other audio sources.

The AIP also includes the logic to switch between different audio input sources. Only one audio input source can be sent to AVS at a given time.

Alexa Communications Library (ACL) - Serves as the main communications channel between a client and AVS. The ACL performs two key functions:

- Establishes and maintains long-lived persistent connections with AVS. The ACL adheres to the messaging specification detailed in Managing an HTTP/2 Connection with AVS.

- Provides message sending and receiving capabilities, including support for JSON-formatted text and binary audio content. For additional information, see Structuring an HTTP/2 Request to AVS.
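As a rough illustration of the JSON-formatted text the ACL carries, the sketch below builds an AVS-style event envelope: an `event` object whose `header` holds `namespace`, `name`, and `messageId`, plus a `payload`. The function names are hypothetical, and the payload is passed in already serialized. The HTTP/2 multipart framing, `dialogRequestId`, and the `context` object that some events require are deliberately left out.

```cpp
#include <cassert>
#include <string>

// Escapes the characters that would otherwise break a JSON string literal.
// Hypothetical helper; control characters other than \n, \r, \t are not handled.
std::string escapeJsonString(const std::string& input) {
    std::string out;
    for (char c : input) {
        switch (c) {
            case '"': out += "\\\""; break;
            case '\\': out += "\\\\"; break;
            case '\n': out += "\\n"; break;
            case '\r': out += "\\r"; break;
            case '\t': out += "\\t"; break;
            default: out += c;
        }
    }
    return out;
}

// Builds a compact event envelope:
// {"event":{"header":{"namespace":...,"name":...,"messageId":...},"payload":...}}
// payloadJson must already be valid JSON (for example "{}").
std::string buildEventJson(const std::string& nameSpace, const std::string& name,
                           const std::string& messageId, const std::string& payloadJson) {
    return std::string("{\"event\":{\"header\":{") +
           "\"namespace\":\"" + escapeJsonString(nameSpace) + "\"," +
           "\"name\":\"" + escapeJsonString(name) + "\"," +
           "\"messageId\":\"" + escapeJsonString(messageId) + "\"}," +
           "\"payload\":" + payloadJson + "}}";
}
```

For example, `buildEventJson("Speaker", "VolumeChanged", "msg-1", "{\"volume\":50,\"muted\":false}")` yields a single-line JSON event. In the SDK, the ACL would send text like this as the JSON part of a multipart HTTP/2 request.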

Alexa Directive Sequencer Library (ADSL): Manages the order and sequence of directives from AVS, as detailed in the AVS Interaction Model. This component manages the lifecycle of each directive and instructs the Directive Handler (which may or may not be a Capability Agent) to handle the message.
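The ordering behavior can be sketched in a few lines. This is a simplified reading of the AVS Interaction Model. It assumes that directives are handed over strictly in arrival order and that a directive whose `dialogRequestId` does not match the current dialog is stale and dropped. `Directive` and `SimpleDirectiveSequencer` are hypothetical names, and blocking directives, cancellation, and threading are omitted.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified directive record (not the SDK's directive class).
struct Directive {
    std::string nameSpace;
    std::string name;
    std::string dialogRequestId;  // empty if the directive is not tied to a dialog
};

// Queues directives in arrival order and hands them to a single handler callback.
class SimpleDirectiveSequencer {
public:
    using Handler = std::function<void(const Directive&)>;

    explicit SimpleDirectiveSequencer(Handler handler) : m_handler(std::move(handler)) {}

    // Starting a new dialog (for example, a new Recognize event) makes
    // directives that belong to earlier dialogs stale.
    void setDialogRequestId(const std::string& dialogRequestId) { m_dialogRequestId = dialogRequestId; }

    // Returns false if the directive was dropped because its dialog is no longer current.
    bool onDirective(const Directive& directive) {
        if (!directive.dialogRequestId.empty() && directive.dialogRequestId != m_dialogRequestId) {
            return false;
        }
        m_queue.push_back(directive);
        return true;
    }

    // Delivers every queued directive to the handler, oldest first.
    void processAll() {
        while (!m_queue.empty()) {
            Directive next = m_queue.front();
            m_queue.pop_front();
            m_handler(next);
        }
    }

private:
    Handler m_handler;
    std::string m_dialogRequestId;
    std::deque<Directive> m_queue;
};
```

Keeping the sequencing in one place means individual handlers never have to reason about stale dialogs themselves. They only see directives that are still relevant, in the order AVS sent them.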

Activity Focus Manager Library (AFML): Provides centralized management of audiovisual focus for the device. Focus is based on channels (as detailed in the AVS Interaction Model), which govern the prioritization of audiovisual inputs and outputs.

Channels can either be in the foreground or background. At any given time, only one channel can be in the foreground and have focus. If multiple channels are active, you need to respect the following priority order: Dialog > Alerts > Content. When a channel that is in the foreground becomes inactive, the next active channel in the priority order moves into the foreground.
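The priority rule above can be expressed as a small lookup. This sketch assumes only the three channels named in the text. It ignores the per-activity bookkeeping and observer callbacks a real focus manager needs. `SimpleFocusManager`, `Channel`, and `FocusState` are hypothetical names, not the SDK's classes.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Declaration order doubles as priority order: Dialog > Alerts > Content.
enum class Channel { Dialog, Alerts, Content };

// Hypothetical focus states for this sketch.
enum class FocusState { None, Background, Foreground };

class SimpleFocusManager {
public:
    void setActive(Channel channel, bool active) { m_active[index(channel)] = active; }

    // An inactive channel has no focus. An active channel is in the foreground
    // only if no higher-priority channel is active; otherwise it is in the background.
    FocusState focusOf(Channel channel) const {
        if (!m_active[index(channel)]) {
            return FocusState::None;
        }
        for (std::size_t i = 0; i < index(channel); ++i) {
            if (m_active[i]) {
                return FocusState::Background;
            }
        }
        return FocusState::Foreground;
    }

private:
    static std::size_t index(Channel channel) { return static_cast<std::size_t>(channel); }

    std::array<bool, 3> m_active{{false, false, false}};
};
```

Deactivating the Dialog channel automatically returns an active Content channel to the foreground. This matches the "next active channel in the priority order moves into the foreground" behavior described in the text.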

Focus management is not specific to Capability Agents or Directive Handlers and can also be used by agents unrelated to Alexa. This allows all agents using the AFML to have consistent focus across a device.

Capability Agents: Handle Alexa-driven interactions, specifically directives and events. Each capability agent corresponds to a specific interface exposed by the AVS API. These interfaces include:

- SpeechRecognizer - The interface for speech capture.

- SpeechSynthesizer - The interface for Alexa speech output.

- Alerts - The interface for setting, stopping, and deleting timers and alarms.

- AudioPlayer - The interface for managing and controlling audio playback.

- Notifications - The interface for displaying notification indicators.

- PlaybackController - The interface for navigating a playback queue via GUI or buttons.

- Speaker - The interface for volume control, including mute and unmute.

- System - The interface for communicating product status/state to AVS.

- TemplateRuntime - The interface for rendering visual metadata.

- Bluetooth - The interface for managing Bluetooth connections between peer devices and Alexa-enabled products.

- EqualizerController - The interface for adjusting equalizer settings, such as decibel (dB) levels and modes.
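One way to picture how directives reach the capability agents listed above is a registry keyed by interface namespace. In the sketch below, `CapabilityRegistry` and its methods are hypothetical. The namespace strings come from the list above, and the SDK's own routing is more involved than a single map lookup.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical registry that routes a directive to the capability agent
// registered for its namespace (for example "Speaker" or "Alerts").
class CapabilityRegistry {
public:
    using AgentCallback = std::function<void(const std::string& directiveName)>;

    // Returns false if an agent is already registered for this namespace.
    bool registerAgent(const std::string& nameSpace, AgentCallback agent) {
        return m_agents.emplace(nameSpace, std::move(agent)).second;
    }

    // Returns false when no agent handles the namespace, so the caller can
    // treat the directive as unsupported.
    bool route(const std::string& nameSpace, const std::string& directiveName) const {
        auto it = m_agents.find(nameSpace);
        if (it == m_agents.end()) {
            return false;
        }
        it->second(directiveName);
        return true;
    }

private:
    std::map<std::string, AgentCallback> m_agents;
};
```

Allowing only one agent per namespace mirrors the one-agent-per-interface relationship described above. A second registration for "Speaker" is rejected rather than silently replacing the first.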

Security Best Practices

When building the SDK, in addition to adopting the Security Best Practices for Alexa:

- Protect configuration parameters, such as those found in the AlexaClientSDKConfig.json file, from tampering and inspection.

- Protect executable files and processes from tampering and inspection.

- Protect storage of the SDK’s persistent states from tampering and inspection.

- Your C++ implementation of AVS Device SDK interfaces must not retain locks, crash, hang, or throw exceptions.

- Use exploit mitigation flags and memory randomization techniques when you compile your source code, to prevent attackers from exploiting buffer overflows and memory corruption.
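The rule that your interface implementations must not retain locks, crash, hang, or throw exceptions can be illustrated with one defensive pattern for a callback. The callback uses a scoped lock that cannot be left held, does only short non-blocking work, and catches everything so no exception propagates back to the caller. `StateObserverInterface` and `RecordingObserver` are hypothetical stand-ins, not SDK interfaces.

```cpp
#include <atomic>
#include <cassert>
#include <mutex>
#include <string>
#include <vector>

// Hypothetical callback interface standing in for an SDK observer interface.
class StateObserverInterface {
public:
    virtual ~StateObserverInterface() = default;
    virtual void onStateChanged(const std::string& newState) = 0;
};

// Records state changes defensively: the lock is scoped, the work is short and
// non-blocking, and no exception can escape back into the caller.
class RecordingObserver : public StateObserverInterface {
public:
    RecordingObserver() : m_droppedUpdates(0) {}

    void onStateChanged(const std::string& newState) override {
        try {
            std::lock_guard<std::mutex> lock(m_mutex);  // unlocked automatically, even on throw
            m_history.push_back(newState);              // may throw std::bad_alloc
        } catch (...) {
            // Swallow everything so the exception never propagates into the SDK.
            // A real implementation would log the failure here.
            ++m_droppedUpdates;
        }
    }

    // Returns a copy so callers never touch m_history without the lock.
    std::vector<std::string> history() const {
        std::lock_guard<std::mutex> lock(m_mutex);
        return m_history;
    }

    int droppedUpdates() const { return m_droppedUpdates.load(); }

private:
    mutable std::mutex m_mutex;
    std::vector<std::string> m_history;
    std::atomic<int> m_droppedUpdates;
};
```

Any slow follow-up work triggered by a state change (network calls, disk I/O) would normally be handed off to another thread rather than done inside the callback, so the SDK thread that invoked it is never blocked.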

Important Considerations

- Review the AVS Terms & Agreements.

- The earcons associated with the sample project are for prototyping purposes only. For implementation and design guidance for commercial products, please see Designing for AVS and AVS UX Guidelines.

- Please use the contact information below to:

  - Contact Sensory for information on TrulyHandsFree licensing.

  - Contact KITT.AI for information on SnowBoy licensing.

- IMPORTANT: The Sensory wake word engine referenced in this document is time-limited: code linked against it will stop working when the library expires. The library included in this repository will, at all times, have an expiration date that is at least 120 days in the future. See Sensory’s GitHub page for more information.

Release Notes and Known Issues

Note: Feature enhancements, updates, and resolved issues from previous releases are available to view in CHANGELOG.md.

v1.10.0 released 10/24/2018:

Enhancements

- New optional configuration for EqualizerController. The EqualizerController interface allows you to adjust equalizer settings on your product, such as decibel (dB) levels and modes.

- Added reference implementation of the EqualizerController for GStreamer-based (macOS, Linux, and Raspberry Pi) and OpenSL ES-based (Android) MediaPlayers. Note: To use it with Android, the device must support OpenSL ES.

- New optional configuration for the [TemplateRuntime display card value](https://github.com/alexa/avs-device-sdk/blob/v1.10.0/Integration/AlexaClientSDKConfig.json#L144).

- A configuration file generator script, genConfig.sh, is now included with the SDK in the tools/Install directory. genConfig.sh and its associated arguments populate AlexaClientSDKConfig.json with the data required to authorize with LWA.

- Added Bluetooth A2DP source and AVRCP target support for Linux.

- Added Alexa for Business (A4B) support, which includes support for handling the new RevokeAuthorization directive in the Settings interface. A new CMake option, -DA4B, has been added to enable A4B within the SDK.

- Added locale support for IT and ES.

- The Alexa Communications Library (ACL), CBLAuthDelegate, and sample app have been enhanced to detect de-authorization using the new z command.

- Added ExternalMediaPlayerObserver, which receives notification of player state, track, and username changes.

- HTTP2ConnectionInterface was factored out of HTTP2Transport to enable unit testing of HTTP2Transport and re-use of HTTP2Connection logic.

Bug Fixes

- Fixed a bug in which ExternalMediaPlayer adapter playback wasn’t being recognized by AVS.

- Issue 973 - Fixed issues related to AudioPlayer where progress reports were being sent out of order or with incorrect offsets.

- An EXPECTING state has been added to DialogUXState in order to handle the EXPECT_SPEECH state for hold-to-talk devices.

- Issue 948 - Fixed a bug in which the sample app was stuck in a listening state.

- Fixed a bug where there was a delay between receiving a DeleteAlert directive and deleting the alert.

- Issue 839 - Fixed an issue where speech was being truncated due to the DialogUXStateAggregator transitioning between the THINKING and IDLE states.

- Fixed a bug in which the AudioPlayer attempted to play when it wasn’t in the FOREGROUND focus.

- CapabilitiesDelegateTest now works on Android.

- Issue 950 - Improved Android Media Player audio quality.

- Issue 908 - Fixed a compile error on g++ 7.x caused by missing includes.

Known Issues

- On GCC 8+, issues related to -Wclass-memaccess will trigger warnings. However, this won’t cause the build to fail, and these warnings can be ignored.

- To use Bluetooth source and sink with PulseAudio, you must manually load and unload the PulseAudio modules after the SDK starts.

- When connecting a new device to AVS, currently connected devices must be manually disconnected. For example, if a user says “Alexa, connect my phone” while an Alexa-enabled speaker is already connected, there is no indication to the user that a device is already connected.

- The ACL may encounter issues if audio attachments are received but not consumed.

- SpeechSynthesizerState currently uses GAINING_FOCUS and LOSING_FOCUS as a workaround for handling intermediate state. These states may be removed in a future release.

- The Alexa app doesn’t always indicate when a device is successfully connected via Bluetooth.

- Connecting a product to streaming media via Bluetooth will sometimes stop media playback within the source application. Resuming playback through the source application or toggling next/previous will correct playback.

- When a source device is streaming silence via Bluetooth, the Alexa app indicates that audio content is streaming.

- The Bluetooth agent assumes that the Bluetooth adapter is always connected to a power source. Disconnecting from a power source during operation is not yet supported.

- On some products, interrupted Bluetooth playback may not resume if other content is locally streamed.

- make integration is currently not available for Android. To run integration tests on Android, you’ll need to manually upload the test binary file along with any input file. At that point, adb can be used to run the integration tests.

- On Raspberry Pi running Android Things with HDMI output audio, the beginning of speech is truncated when Alexa responds to user TTS.

- When the sample app is restarted and the network connection is lost, Alerts don’t play.

- When the network connection is lost, the lost connection status is not returned via local TTS.
